主成分分析也称主分量分析,旨在利用降维的思想,把多指标转化为少数几个综合指标(即主成分),其中每个主成分都能够反映原始变量的大部分信息,且所含信息互不重复。这种方法在引进多方面变量的同时将复杂因素归结为几个主成分,使问题简单化,同时得到的结果更加科学有效的数据信息。

使用梯度上升法求解主成分

//准备数据import numpy as npimport matplotlib.pyplot as plt X = np.empty((100,2)) X[:,0] = np.random.uniform(0.,100.,size=100) X[:,1] = 0.75*X[:,0]+3.+np.random.normal(0,10.,size=100) plt.scatter(X[:,0],X[:,1]) plt.show

// 数据demean过程def demean(X): return X - np.mean(X,axis=0)

#效用函数def f(w,X): return np.sum((X.dot(w)**2))/len(X)#效用函数导函数def df_math(w,X): return X.T.dot(X.dot(w))*2./len(X)#测试导函数是否正确def df_debug(w,x,epsilon=0.0001): res = np.empty(len(w)) for i in range(len(w)): w_1 = w.copy() w_1[i] += epsilon w_2 = w.copy() w_2[i] -= epsilon res[i] = (f(w_1,X)-f(w_2,X))/(2*epsilon) return res#使w变为单位向量def direction(w): return w/np.linalg.norm(w)#梯度上升def gradient_ascent(df,X,initial_w,eta,n_iters=1e4,epsilon=1e-8): cur_iter = 0 w = direction(initial_w) while cur_iter<n_iters: gradient = df(w,X) last_w = w w = w + eta*gradient w = direction(w) #每次计算后都应该将w转变为单位向量 if(abs(f(w,X) - f(last_w,X))<epsilon): break cur_iter +=1 return w

initial_w = np.random.random(X.shape[1]) #不能使用0向量作为初始向量,因为0向量本身是一个极值点eta = 0.01 gradient_ascent(df_debug,X,initial_w,eta) gradient_ascent(df_math,X,initial_w,eta)

求解数据的前n个主成分

def first_n_components(n,X,eta=0.01,n_iters=1e4,epsilon=1e-8): X_pca = X.copy() X_pca = demean(X_pca) res = [] for i in range(n): initial_w = np.random.random(X_pca.shape[1]) w = first_component(df_math,X_pca,initial_w,eta) res.append(w) /* for i in range(len(X)): X2[i] = X[i] -X[i].dot(w)*w */ X_pca = X_pca - X_pca.dot(w).reshape(-1,1)*w return res

封装PCA

# _*_ encoding:utf-8 _*_import numpy as npclass PCA: def __init__(self,n_components): self.n_components = n_components self.components_ = None def fit(self,X,eta=0.01,n_iters=1e4): def demean(X): return X - np.mean(X, axis=0) # 效用函数 def f(w, X): return np.sum((X.dot(w) ** 2)) / len(X) # 效用函数导函数 def df(w, X): return X.T.dot(X.dot(w)) * 2. / len(X) def direction(w): return w / np.linalg.norm(w) def first_component(df, X, initial_w, eta, n_iters=1e4, epsilon=1e-8): cur_iter = 0 w = direction(initial_w) while cur_iter < n_iters: gradient = df(w, X) last_w = w w = w + eta * gradient w = direction(w) # 每次计算后都应该将w转变为单位向量 if (abs(f(w, X) - f(last_w, X)) < epsilon): break cur_iter += 1 return w def first_n_components(n, X, eta=0.01, n_iters=1e4, epsilon=1e-8): X_pca = X.copy() X_pca = demean(X_pca) res = [] for i in range(n): initial_w = np.random.random(X_pca.shape[1]) w = first_component(df, X_pca, initial_w, eta) res.append(w) X_pca = X_pca - X_pca.dot(w).reshape(-1, 1) * w return res X_pca = demean(X) self.components_ = np.empty(shape=(self.n_components,X.shape[1])) self.components_ = first_n_components(self.n_components,X) self.components_ = np.array(self.components_) return self def transform(self,X): return X.dot(self.components_.T) def inverse_transform(self,X): return X.dot(self.components_) def __repr__(self): return "PCA(n_components=%d)" %self.n_components

scikit-learn中的PCA

scikit-learn中的PCA没有使用梯度上升法求解主成分,因此使用sklearn中的PCA求解的主成分是与我们求解的向量方向是相反的

from sklearn.decomposition import PCA pca = PCA(n_components=1) pca.fit(X) X_transform = pca.transform(X) X_restore = pca.inverse_transform(X_transform) plt.scatter(X[:,0],X[:,1],color='b',alpha=0.5) plt.scatter(X_restore[:,0],X_restore[:,1],color='r',alpha=0.5)

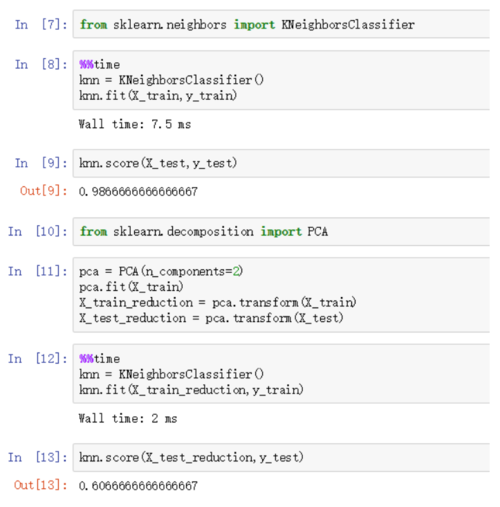

使用PCA处理digits数据集

从图中可以看到,使用PCA将digits数据集的数据维度降低到二维后,knn算法的fit时间降低很多,而score准确率却下降到0.6

pca = PCA(n_components=X_train.shape[1]) pca.fit(X_train) pca.explained_variance_ratio_ #合并某一维度之后的对数据方差的损失后的正确率plt.plot([i for i in range(X.shape[1])],[np.sum(pca.explained_variance_ratio_[:i+1]) for i in range(X_train.shape[1])])

pca = PCA(0.95) pca.fit(X_train) pca.n_components_ -> pca.n_components = 28#即降低到28个维度后有原数据95%的正确率

作者:冰源_63ad

链接:https://www.jianshu.com/p/b20feae17536

随时随地看视频

随时随地看视频