Programming assignment: Regularization gradient value computed incorrectly

```python
# GRADED FUNCTION: gradient_check

def gradient_check(x, theta, epsilon = 1e-7):
    """
    Implement the backward propagation presented in Figure 1.

    Arguments:
    x -- a real-valued input
    theta -- our parameter, a real number as well
    epsilon -- tiny shift to the input to compute approximated gradient with formula(1)

    Returns:
    difference -- difference (2) between the approximated gradient and the backward propagation gradient
    """

    # Compute gradapprox using left side of formula (1). epsilon is small enough, you don't need to worry about the limit.
    ### START CODE HERE ### (approx. 5 lines)
    thetaplus = theta + 0.01                        # Step 1
    thetaminus = theta - 0.01                       # Step 2
    J_plus = forward_propagation(x, thetaplus)      # Step 3
    J_minus = forward_propagation(x, thetaminus)    # Step 4
    gradapprox = (J_plus - J_minus) / (2 * 0.01)    # Step 5
    ### END CODE HERE ###

    # Check if gradapprox is close enough to the output of backward_propagation()
    ### START CODE HERE ### (approx. 1 line)
    grad = backward_propagation(x, theta)
    ### END CODE HERE ###
```

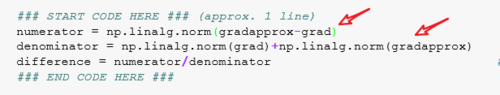

```python
    ### START CODE HERE ### (approx. 1 line)
    numerator = np.linalg.norm(gradapprox - grad)                        # Step 1'
    denominator = np.linalg.norm(grad) + np.linalg.norm(gradapprox)      # Step 2'
    difference = numerator / denominator                                 # Step 3'
    ### END CODE HERE ###

    if difference < 1e-7:
        print("The gradient is correct!")
    else:
        print("The gradient is wrong!")

    return difference
```
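The code comments refer to "formula (1)" and "difference (2)" from the assignment notebook, which are not reproduced in this post. Assuming they are the standard centered-difference definition of the derivative and the usual relative-difference measure, they read:

$$
\frac{\partial J}{\partial \theta} = \lim_{\varepsilon \to 0} \frac{J(\theta + \varepsilon) - J(\theta - \varepsilon)}{2 \varepsilon} \tag{1}
$$

$$
\text{difference} = \frac{\lVert \mathrm{grad} - \mathrm{gradapprox} \rVert_2}{\lVert \mathrm{grad} \rVert_2 + \lVert \mathrm{gradapprox} \rVert_2} \tag{2}
$$

In practice ε is fixed at a small value rather than taken to the limit, and Step 5 divides by 2ε, so the shift in Steps 1 and 2 and the divisor in Step 5 must all use the same ε.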

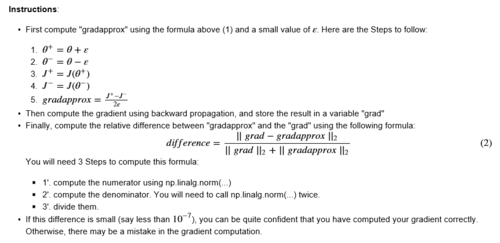

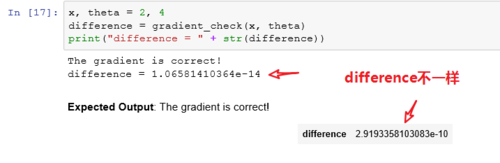

I suspect the error is in the part shown in the screenshots, but I don't know how to fix it. Any ideas?

Solution:

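Use the `epsilon` parameter (default `1e-7`) instead of the hard-coded `0.01`: Steps 1, 2 and 5 should read `theta + epsilon`, `theta - epsilon` and `(J_plus - J_minus) / (2 * epsilon)`.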
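A minimal corrected sketch, made self-contained so it runs outside the notebook. `forward_propagation` and `backward_propagation` here are hypothetical stand-ins that assume the 1-D linear example J(θ) = θ·x (the notebook defines its own versions), and `cubic_check` is a hypothetical nonlinear cost added only to show why the step size matters.

```python
import numpy as np


# ASSUMPTION: stand-ins for the notebook's helpers, modelled on the
# 1-D linear example J(theta) = theta * x.
def forward_propagation(x, theta):
    """Cost of the assumed 1-D linear model: J(theta) = theta * x."""
    return theta * x


def backward_propagation(x, theta):
    """Analytic derivative of J(theta) = theta * x with respect to theta."""
    return x


def gradient_check(x, theta, epsilon=1e-7):
    """Return the relative difference between numerical and analytic gradients."""
    # Formula (1): centered difference using the epsilon argument, not 0.01
    thetaplus = theta + epsilon                      # Step 1
    thetaminus = theta - epsilon                     # Step 2
    J_plus = forward_propagation(x, thetaplus)       # Step 3
    J_minus = forward_propagation(x, thetaminus)     # Step 4
    gradapprox = (J_plus - J_minus) / (2 * epsilon)  # Step 5

    # Analytic gradient from backpropagation
    grad = backward_propagation(x, theta)

    # Formula (2): relative difference between the two gradients
    numerator = np.linalg.norm(grad - gradapprox)
    denominator = np.linalg.norm(grad) + np.linalg.norm(gradapprox)
    difference = numerator / denominator

    if difference < 1e-7:
        print("The gradient is correct!")
    else:
        print("The gradient is wrong!")
    return difference


def cubic_check(x, theta, epsilon):
    """HYPOTHETICAL nonlinear cost J = theta**3 * x, used to compare step sizes."""
    def cost(t):
        return t ** 3 * x

    gradapprox = (cost(theta + epsilon) - cost(theta - epsilon)) / (2 * epsilon)
    grad = 3 * theta ** 2 * x  # exact derivative of theta**3 * x
    return abs(grad - gradapprox) / (abs(grad) + abs(gradapprox))


if __name__ == "__main__":
    print("difference =", gradient_check(2, 4))
    # For this cubic cost the centered difference overshoots by epsilon**2 * x
    print("cubic, epsilon=0.01:", cubic_check(2, 4, 0.01))
    print("cubic, epsilon=1e-7:", cubic_check(2, 4, 1e-7))
```

For the linear cost a large step happens to be exact, because a straight line has no truncation error. For the cubic cost the centered difference is off by ε²·x, so with ε = 0.01 the relative difference comes out around 1e-6, above the 1e-7 threshold, while ε = 1e-7 stays below it. Taking the step from `epsilon` keeps the check consistent with whatever value the caller passes.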

无无法师


Python


Big Data
