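While I was taking a Hadoop course, the instructor covered both the standalone and the cluster deployment of Hadoop. My local resources are limited to a single virtual machine, so I decided to build the distributed cluster with Docker.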

Preparation

Pull the CentOS image: docker pull centos

Start a container: docker run -td --name base -i centos bash

Enter the container and install the JDK and Hadoop, the same way as on a regular host.

Install SSH inside the container: yum install openssh-server openssh-clients

Set up passwordless login:

    ssh-keygen -t rsa
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
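The passwordless login step can also be scripted so that it runs without prompts. The following is a minimal sketch, not the author's method: the function name `setup_passwordless_ssh` and its optional directory argument are hypothetical, introduced only so the function can be tried in a scratch directory before it touches `~/.ssh`.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive passwordless SSH setup.
# setup_passwordless_ssh is a hypothetical helper, not part of OpenSSH.

setup_passwordless_ssh() {
  # Target directory; defaults to the current user's ~/.ssh.
  local ssh_dir="${1:-$HOME/.ssh}"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"

  # Create an RSA key pair with an empty passphrase unless one already exists.
  if [ ! -f "$ssh_dir/id_rsa" ]; then
    ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa"
  fi

  # Authorize the public key once, so re-running does not add duplicates.
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -f "$ssh_dir/id_rsa.pub" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage inside the container:
#   setup_passwordless_ssh
```

In a fresh container, sshd usually also needs host keys before it will start; `ssh-keygen -A` generates any that are missing.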

Save the container changes as an image: docker commit base hadoop:4

Configuration

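Create a new directory with the structure below. Only two files need to be created by hand, docker-compose.yml and Dockerfile; the other folders are generated automatically at startup.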

docker-compose.yml

    version: '2.0'
    services:
      hadoop01:
        build: .
        container_name: "hadoop01"
        volumes:
          - ./hadoop01:/data/hadoop_repo
        tty: true
        privileged: true
        hostname: hadoop01
        ports:
          - "9870:9870"
          - "8088:8088"
      hadoop02:
        image: "hadoopdockercluster_hadoop01"
        container_name: "hadoop02"
        tty: true
        privileged: true
        hostname: hadoop02
        volumes:
          - ./hadoop02:/data/hadoop_repo
      hadoop03:
        image: "hadoopdockercluster_hadoop01"
        container_name: "hadoop03"
        tty: true
        privileged: true
        hostname: hadoop03
        volumes:
          - ./hadoop03:/data/hadoop_repo

Dockerfile

    FROM hadoop:4
    ENV JAVA_HOME=/opt/jdk1.8.0_181
    ENV HADOOP_HOME=/opt/hadoop-3.2.0-cluster
    ENV PATH=.:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$JAVA_HOME/bin
    CMD ["/usr/sbin/sshd","-D"]

Startup

    docker-compose build
    docker-compose up -d
    docker exec -it hadoop01 bash
    # Format HDFS:
    hdfs namenode -format
    # Start the cluster:
    start-all.sh
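In Hadoop 3, start-all.sh reads the `workers` file under `$HADOOP_HOME/etc/hadoop` to decide which hosts get worker daemons. The post does not show that file, so the sketch below assumes it has not yet been filled in inside the image. The helper name `write_workers_file` is hypothetical, and listing hadoop01 as a worker is a choice, not something the original states.

```shell
#!/usr/bin/env bash
# Sketch: write one worker hostname per line into <conf_dir>/workers.
# write_workers_file is a hypothetical helper, not a Hadoop command.

write_workers_file() {
  local conf_dir="$1"
  shift
  mkdir -p "$conf_dir"
  # Each remaining argument becomes one line of the workers file.
  printf '%s\n' "$@" > "$conf_dir/workers"
}

# Usage on hadoop01, before running start-all.sh:
#   write_workers_file "$HADOOP_HOME/etc/hadoop" hadoop01 hadoop02 hadoop03
```

The hostnames match the `hostname` values from docker-compose.yml, which is what lets the containers reach each other over SSH by name.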

Verification

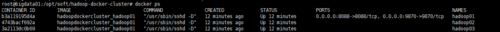

List the containers

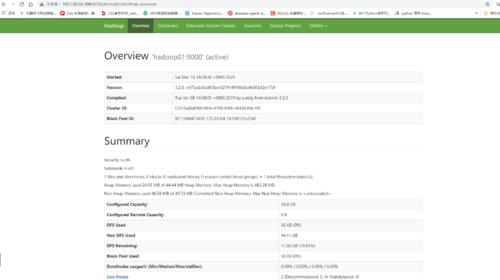

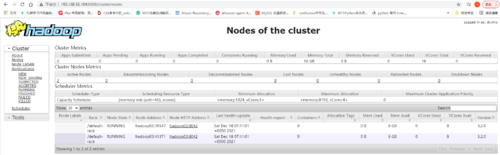
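The container list check can also be done from a script instead of reading the listing by eye. This is a small sketch under the assumption that the three container names from docker-compose.yml are the ones expected; `missing_containers` is a hypothetical helper that reads names on standard input so that it stays independent of Docker itself.

```shell
#!/usr/bin/env bash
# Sketch: report which expected containers are not running.
# missing_containers is a hypothetical helper, not a Docker command.

# Reads running container names on stdin (one per line) and prints every
# expected name, given as arguments, that is not among them.
missing_containers() {
  local running name
  running="$(cat)"
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
      printf '%s\n' "$name"
    fi
  done
}

# Usage on the Docker host:
#   docker ps --format '{{.Names}}' | missing_containers hadoop01 hadoop02 hadoop03
```

No output means all three containers are up; any name printed is a container that is not running.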

Web UI access:

hdfs: http://192.168.56.104:9870/

yarn: http://192.168.56.104:8088/
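The two web UIs can also be probed from a terminal, which helps when no browser is at hand. The sketch below assumes curl is installed on the machine running it; `ui_url` and `http_status` are hypothetical helper names, and 192.168.56.104 is simply the VM address used in this post.

```shell
#!/usr/bin/env bash
# Sketch: probe the HDFS and YARN web UIs with curl.
# ui_url and http_status are hypothetical helpers.

# Build the web UI URL for a host and port.
ui_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}

# Print the HTTP status code returned by a URL; curl reports 000 when
# the server cannot be reached within 5 seconds.
http_status() {
  curl -s -o /dev/null -m 5 -w '%{http_code}' "$1" || true
}

# Usage:
#   http_status "$(ui_url 192.168.56.104 9870)"   # HDFS NameNode UI
#   http_status "$(ui_url 192.168.56.104 8088)"   # YARN ResourceManager UI
```

A 2xx or 3xx status code suggests the port mapping from docker-compose.yml works and the daemon is listening.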
