Preface

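I recently bought a personal domain name from a cloud provider. My plan was to eventually buy a server and build my own website on it, but that takes a lot of preparation, so I shelved it for now and started with a static site on GitHub Pages instead. The setup had its twists and turns, and the domain configuration in particular wore me out, but overall it went fairly smoothly. The site is at https://chenchangyuan.cn (an empty blog for now, though the theme looks quite nice; I will fill it in over time).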

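It is built with git + npm + Hexo and then configured on GitHub. There are plenty of tutorials online; if you have questions, feel free to ask in the comments.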

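I spent a few years working with Java, but because of my role at the company I was gradually "bent" toward the front end, and now I mainly do front-end development.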

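So I wanted to use Java to crawl my articles and then convert the crawled HTML into Markdown (the conversion is not implemented yet; suggestions are welcome).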
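For the HTML-to-Markdown step that is still open, here is a deliberately naive sketch based on regular expressions. The class name `NaiveHtmlToMd` is hypothetical, and it assumes you have already cut the post body out of the saved page (the crawled files also contain navigation, sidebars and scripts). It only handles headings `h1` to `h3`, paragraphs, bold, italic, inline code, links and `<br>`, and simply drops every other tag; for real pages a proper HTML parser such as jsoup would be far more robust.

```java
import java.util.regex.Pattern;

// Hypothetical, naive HTML-to-Markdown converter built on regular expressions.
// Handles only a few common tags; anything else is stripped, keeping its text.
class NaiveHtmlToMd {
    private static final String[] HASHES = {"#", "##", "###"};

    static String convert(String html) {
        String md = html;
        // Inline elements first, so they are already converted inside blocks
        md = md.replaceAll("(?is)<(strong|b)>(.*?)</\\1>", "**$2**");
        md = md.replaceAll("(?is)<(em|i)>(.*?)</\\1>", "*$2*");
        md = md.replaceAll("(?is)<code>(.*?)</code>", "`$1`");
        md = md.replaceAll("(?is)<a\\s[^>]*?href=\"([^\"]*)\"[^>]*>(.*?)</a>", "[$2]($1)");
        md = md.replaceAll("(?i)<br\\s*/?>", "\n");
        // Headings <h1>..<h3> become "#".."###" on their own line
        for (int level = 1; level <= 3; level++) {
            md = md.replaceAll("(?is)<h" + level + "[^>]*>(.*?)</h" + level + ">",
                    "\n" + HASHES[level - 1] + " $1\n\n");
        }
        // <p> or <p ...> but not <pre>: the attribute part must start with whitespace
        md = md.replaceAll("(?is)<p(?:\\s[^>]*)?>(.*?)</p>", "$1\n\n");
        // Drop any remaining tags, keeping their text content
        md = md.replaceAll("<[^>]+>", "");
        // Decode a few common entities; &amp; last so "&amp;lt;" is not double-decoded
        md = md.replace("&nbsp;", " ").replace("&lt;", "<").replace("&gt;", ">")
               .replace("&quot;", "\"").replace("&amp;", "&");
        // Collapse runs of blank lines and trim
        md = Pattern.compile("\n{3,}").matcher(md).replaceAll("\n\n");
        return md.trim();
    }

    public static void main(String[] args) {
        String html = "<h1>Title</h1><p>Hello <strong>world</strong> and "
                + "<a href=\"https://example.com\">link</a></p>";
        System.out.println(convert(html));
    }
}
```

Running `main` should print `# Title`, a blank line, then `Hello **world** and [link](https://example.com)`. Nested lists, tables and `<pre>` code blocks are not handled at all, which is exactly where a regex approach breaks down.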

1. Get the URLs of all blog posts

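Look at the blog's list pages, e.g. https://www.cnblogs.com/ccylovehs/default.html?page=1

Loop over the list pages (page=1, 2, ...); how many there are depends on how many posts you have written.

Store the detail-page URL of each post in a Set, which also removes duplicates. A detail-page URL looks like https://www.cnblogs.com/ccylovehs/p/9547690.html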
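Step 1 can be tried offline with a small sketch that builds the list-page URL and pulls detail-page URLs out of a piece of HTML. The class `ListPageParser` and the sample HTML are hypothetical; in the real crawler the HTML is the downloaded list page. It assumes posts are linked with absolute URLs of the form above, and it escapes the dots in the regex so `.` only matches a literal dot.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper illustrating step 1 without any network access.
class ListPageParser {
    static final String URL_PAGE = "https://www.cnblogs.com/ccylovehs/default.html?page=";
    // Detail-page pattern; group 1 is the file name such as "9547690.html"
    static final Pattern DETAIL =
            Pattern.compile("https://www\\.cnblogs\\.com/ccylovehs/p/([0-9]+\\.html)");

    // List-page URL for page idx (1-based)
    static String listPageUrl(int idx) {
        return URL_PAGE + idx;
    }

    // Every detail-page URL in the HTML; the TreeSet drops duplicates and keeps them sorted
    static Set<String> extractDetailUrls(String html) {
        Set<String> urls = new TreeSet<>();
        Matcher m = DETAIL.matcher(html);
        while (m.find()) {          // a single line may contain several links
            urls.add(m.group());
        }
        return urls;
    }

    public static void main(String[] args) {
        String html = "<a href=\"https://www.cnblogs.com/ccylovehs/p/9547690.html\">A</a>"
                + "<a href=\"https://www.cnblogs.com/ccylovehs/p/9547690.html\">A again</a>"
                + "<a href=\"https://www.cnblogs.com/ccylovehs/p/9574084.html\">B</a>";
        System.out.println(listPageUrl(2));
        System.out.println(extractDetailUrls(html));
    }
}
```

The duplicated link to 9547690.html is stored only once, which is why a Set is used instead of a List.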

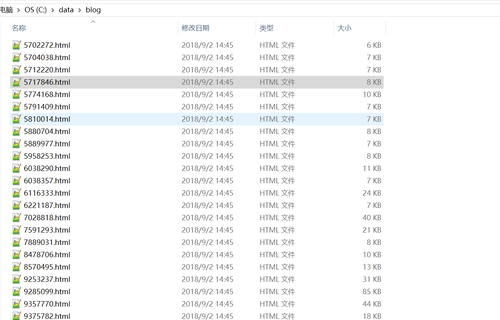

2. Generate an HTML file from each detail-page URL

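Iterate over the Set and download each detail page into its own HTML file.

The files are saved under C:/data/blog, and each file name is taken from capture group 1 of the detail-page regex (for example, 9547690.html).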
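A minimal sketch of how capture group 1 turns into the output path, assuming the same detail-page pattern as step 1. The class and method names (`OutputPath`, `fileNameFor`, `targetFile`) are hypothetical; unlike calling `m.group(1)` directly, it checks whether the URL matched at all before using the group.

```java
import java.io.File;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper illustrating step 2: map a detail-page URL to its output file.
class OutputPath {
    static final Pattern DETAIL =
            Pattern.compile("https://www\\.cnblogs\\.com/ccylovehs/p/([0-9]+\\.html)");

    // Capture group 1 (e.g. "9547690.html") is the file name; null if the URL does not match
    static String fileNameFor(String url) {
        Matcher m = DETAIL.matcher(url);
        return m.find() ? m.group(1) : null;
    }

    // Resolve the file inside dir (C:/data/blog in the article)
    static File targetFile(String dir, String url) {
        String name = fileNameFor(url);
        if (name == null) {
            throw new IllegalArgumentException("not a detail-page URL: " + url);
        }
        return new File(dir, name);
    }

    public static void main(String[] args) {
        String url = "https://www.cnblogs.com/ccylovehs/p/9547690.html";
        System.out.println(fileNameFor(url));
        System.out.println(targetFile("C:/data/blog", url).getName());
    }
}
```

Both lines printed by `main` should be `9547690.html`, so each post ends up in a file named after its cnblogs post id.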

3. Code implementation

```java
package com.blog.util;

import java.io.BufferedReader;
import java.io.File;
import java.io.InputStreamReader;
import java.io.PrintStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * @author Jack Chen
 */
public class BlogUtil {

    /**
     * URL_PAGE: cnblogs list-page URL (the page number is appended)
     * URL_PAGE_DETAIL: detail-page URL regex; capture group 1 is the file name
     * PAGE_COUNT: number of list pages
     * urlLists: Set of all detail-page URLs (prevents duplicates)
     * p: compiled pattern for URL_PAGE_DETAIL
     */
    public final static String URL_PAGE = "https://www.cnblogs.com/ccylovehs/default.html?page=";
    // Dots escaped so they match only a literal "."
    public final static String URL_PAGE_DETAIL = "https://www\\.cnblogs\\.com/ccylovehs/p/([0-9]+\\.html)";
    public final static int PAGE_COUNT = 3;
    public static Set<String> urlLists = new TreeSet<String>();
    public final static Pattern p = Pattern.compile(URL_PAGE_DETAIL);

    public static void main(String[] args) throws Exception {
        for (int i = 1; i <= PAGE_COUNT; i++) {
            getUrls(i);
        }
        for (String url : urlLists) {
            createFile(url);
        }
    }

    /**
     * Download one detail page and save it as C:/data/blog/(capture group 1).
     * @param url detail-page URL
     * @throws Exception on network or file errors
     */
    private static void createFile(String url) throws Exception {
        Matcher m = p.matcher(url);
        if (!m.find()) {
            return; // not a detail-page URL, nothing to save
        }
        String fileName = m.group(1);
        File dir = new File("C:/data/blog/");
        dir.mkdirs(); // create the target directory if it does not exist yet
        File file = new File(dir, fileName);

        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.connect();
        // try-with-resources closes both streams even if reading fails
        try (BufferedReader br = new BufferedReader(
                     new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8));
             PrintStream ps = new PrintStream(file, "UTF-8")) {
            String str;
            while ((str = br.readLine()) != null) {
                ps.println(str);
            }
        } finally {
            conn.disconnect();
        }
    }

    /**
     * Scan list page idx and collect every detail-page URL it links to.
     * @param idx list-page number, starting at 1
     * @throws Exception on network errors
     */
    private static void getUrls(int idx) throws Exception {
        HttpURLConnection conn = (HttpURLConnection) new URL(URL_PAGE + idx).openConnection();
        conn.connect();
        try (BufferedReader br = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String str;
            while ((str = br.readLine()) != null) {
                Matcher m = p.matcher(str);
                // a line may contain more than one link, so collect every match
                while (m.find()) {
                    System.out.println(m.group(1));
                    urlLists.add(m.group());
                }
            }
        } finally {
            conn.disconnect();
        }
    }
}
```

Original source: https://www.cnblogs.com/ccylovehs/p/9574084.html
