I. Hadoop Run Modes

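(1) Local mode (the default): no separate daemon processes need to be started, and jobs run directly. Used for testing and development.

(2) Pseudo-distributed mode: works like fully distributed mode, but on a single node, with each Hadoop daemon running as a separate Java process.

(3) Fully distributed mode: multiple nodes run together as a cluster.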
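Which mode Hadoop runs in is largely a matter of configuration. As a rough illustration, the hypothetical helper below (not a Hadoop API) guesses the mode from the `fs.defaultFS` property. Its default value `file:///` means the local file system with no HDFS daemons, and `hdfs://localhost:9000` is the value the official single-node guide uses for pseudo-distributed mode. Treating any other host as fully distributed is a simplifying assumption of this sketch.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper, not a Hadoop API: guesses the run mode from fs.defaultFS.
class RunModeGuess {

    enum Mode { LOCAL, PSEUDO_DISTRIBUTED, FULLY_DISTRIBUTED }

    static Mode guess(Map<String, String> conf) {
        // file:/// is Hadoop's default: local file system, no HDFS daemons
        URI fs = URI.create(conf.getOrDefault("fs.defaultFS", "file:///"));
        if ("file".equals(fs.getScheme())) {
            return Mode.LOCAL;
        }
        // Simplifying assumption: HDFS on this machine means a single-node cluster
        String host = fs.getHost();
        if ("localhost".equals(host) || "127.0.0.1".equals(host)) {
            return Mode.PSEUDO_DISTRIBUTED;
        }
        return Mode.FULLY_DISTRIBUTED;
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        System.out.println(guess(conf));                       // LOCAL

        conf.put("fs.defaultFS", "hdfs://localhost:9000");
        System.out.println(guess(conf));                       // PSEUDO_DISTRIBUTED

        conf.put("fs.defaultFS", "hdfs://namenode-host:9000"); // hypothetical host name
        System.out.println(guess(conf));                       // FULLY_DISTRIBUTED
    }
}
```

In a real installation these properties live in `core-site.xml`; the map here just stands in for that file.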

Below is the original text from the official website:

This will display the usage documentation for the hadoop script.

Now you are ready to start your Hadoop cluster in one of the three supported modes:

Local (Standalone) Mode

Pseudo-Distributed Mode

Fully-Distributed Mode

II. Examples from the Official Website

1) grep

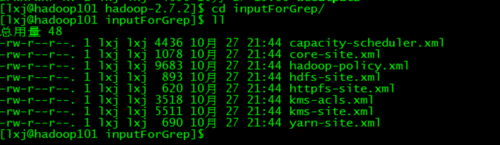

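First, create the inputForGrep directory to hold the input files.

`cp etc/hadoop/*.xml inputForGrep/` copies all the XML files under Hadoop's etc/hadoop directory into the input directory, where they serve as the data to process.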
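The two preparation steps (create inputForGrep, copy `etc/hadoop/*.xml` into it) can also be sketched in Java with `java.nio.file`. The class and method names are made up for illustration, and `main` assumes the working directory is the Hadoop installation directory.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical Java equivalent of: mkdir inputForGrep && cp etc/hadoop/*.xml inputForGrep/
class PrepareGrepInput {

    /** Copies every *.xml file in confDir into inputDir and returns how many were copied. */
    static int copyXmlFiles(Path confDir, Path inputDir) throws IOException {
        Files.createDirectories(inputDir);
        int copied = 0;
        // The glob only matches names ending in .xml, like the shell pattern *.xml
        try (DirectoryStream<Path> xmlFiles = Files.newDirectoryStream(confDir, "*.xml")) {
            for (Path src : xmlFiles) {
                Files.copy(src, inputDir.resolve(src.getFileName()), StandardCopyOption.REPLACE_EXISTING);
                copied++;
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Assumption: run from the Hadoop installation directory
        int n = copyXmlFiles(Paths.get("etc/hadoop"), Paths.get("inputForGrep"));
        System.out.println("Copied " + n + " XML files");
    }
}
```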

Run the grep command.

Check the results.
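To make the grep output easier to read, here is an in-memory sketch of what the example computes, with no Hadoop involved. Every regex match is counted (the role of RegexMapper and LongSumReducer in the grep-search job), then matches are listed by decreasing count (InverseMapper plus `LongWritable.DecreasingComparator` in the grep-sort job). The class name and sample lines are illustrative; the regex `dfs[a-z.]+` is the one the official documentation uses.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// In-memory sketch of the two jobs Grep chains together; no Hadoop involved.
class GrepSketch {

    static List<Map.Entry<String, Long>> grep(List<String> lines, String regex, int group) {
        Pattern pattern = Pattern.compile(regex);
        Map<String, Long> counts = new HashMap<>();
        // "grep-search": emit (match, 1) for every match, then sum per match
        for (String line : lines) {
            Matcher m = pattern.matcher(line);
            while (m.find()) {
                counts.merge(m.group(group), 1L, Long::sum);
            }
        }
        // "grep-sort": order by count, highest first (ties in no particular order)
        List<Map.Entry<String, Long>> sorted = new ArrayList<>(counts.entrySet());
        sorted.sort(Map.Entry.<String, Long>comparingByValue().reversed());
        return sorted;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
            "<name>dfs.replication</name>",
            "<name>dfs.namenode.name.dir</name>",
            "<name>dfs.replication</name>");
        // Prints count, a tab, then the match, the same column order as the real output
        for (Map.Entry<String, Long> e : grep(lines, "dfs[a-z.]+", 0)) {
            System.out.println(e.getValue() + "\t" + e.getKey());
        }
    }
}
```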

2) wordcount

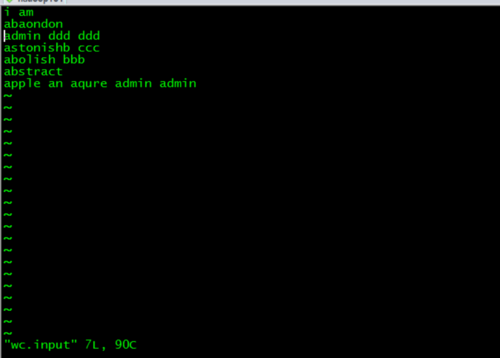

Create the wcinput directory.

Create the wc.input file inside wcinput.

Run the wordcount command.

Check the results:
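The wordcount data flow can be sketched in a single JVM: split lines into words and emit (word, 1) as TokenizerMapper does, group by word (the shuffle/sort step), then sum each group as IntSumReducer does. This is a simplified model, not Hadoop code; the class name and sample lines are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Single-JVM sketch of the WordCount data flow: map -> shuffle/sort -> reduce.
class WordCountSketch {

    static Map<String, Integer> wordCount(List<String> lines) {
        // Map phase: like TokenizerMapper, split on whitespace and emit (word, 1)
        List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                mapped.add(Map.entry(itr.nextToken(), 1));
            }
        }
        // Shuffle/sort: group values by key; TreeMap keeps keys sorted
        // (for ASCII words this matches the byte order Hadoop uses for Text keys)
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> kv : mapped) {
            grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
        }
        // Reduce phase: like IntSumReducer, sum the values for each key
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum += v;
            }
            result.put(e.getKey(), sum);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("hadoop yarn", "hadoop mapreduce", "yarn hadoop");
        // Prints one "word<TAB>count" line per word, in sorted order
        wordCount(lines).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```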

III. Viewing the Source Code

Decompiling the examples jar shows the source code that the grep and wordcount examples run:

Grep.java:

```java
package org.apache.hadoop.examples;

import java.util.Random;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.SequenceFileInputFormat;
import org.apache.hadoop.mapreduce.lib.map.InverseMapper;
import org.apache.hadoop.mapreduce.lib.map.RegexMapper;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;
import org.apache.hadoop.mapreduce.lib.reduce.LongSumReducer;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class Grep extends Configured implements Tool {

    public int run(String[] args) throws Exception {
        if (args.length < 3) {
            System.out.println("Grep <inDir> <outDir> <regex> [<group>]");
            ToolRunner.printGenericCommandUsage(System.out);
            return 2;
        }

        // Intermediate output of the first job; deleted in the finally block
        Path tempDir = new Path("grep-temp-" + Integer.toString(new Random().nextInt(Integer.MAX_VALUE)));

        Configuration conf = getConf();
        conf.set(RegexMapper.PATTERN, args[2]);   // regex each mapper searches for
        if (args.length == 4) {
            conf.set(RegexMapper.GROUP, args[3]); // optional capture group to emit
        }

        Job grepJob = Job.getInstance(conf);
        try {
            // Job 1 ("grep-search"): emit (match, 1) per match and sum the counts per match
            grepJob.setJobName("grep-search");
            grepJob.setJarByClass(Grep.class);
            FileInputFormat.setInputPaths(grepJob, args[0]);
            grepJob.setMapperClass(RegexMapper.class);
            grepJob.setCombinerClass(LongSumReducer.class);
            grepJob.setReducerClass(LongSumReducer.class);
            FileOutputFormat.setOutputPath(grepJob, tempDir);
            grepJob.setOutputFormatClass(SequenceFileOutputFormat.class);
            grepJob.setOutputKeyClass(Text.class);
            grepJob.setOutputValueClass(LongWritable.class);
            grepJob.waitForCompletion(true);

            // Job 2 ("grep-sort"): swap to (count, match) and sort by count, descending
            Job sortJob = Job.getInstance(conf);
            sortJob.setJobName("grep-sort");
            sortJob.setJarByClass(Grep.class);
            FileInputFormat.setInputPaths(sortJob, tempDir);
            sortJob.setInputFormatClass(SequenceFileInputFormat.class);
            sortJob.setMapperClass(InverseMapper.class);
            sortJob.setNumReduceTasks(1); // a single reducer writes a single output file
            FileOutputFormat.setOutputPath(sortJob, new Path(args[1]));
            sortJob.setSortComparatorClass(LongWritable.DecreasingComparator.class);
            sortJob.waitForCompletion(true);
        } finally {
            FileSystem.get(conf).delete(tempDir, true);
        }
        return 0;
    }

    public static void main(String[] args) throws Exception {
        // ToolRunner handles generic options (e.g. -D key=value) before calling run()
        int res = ToolRunner.run(new Configuration(), new Grep(), args);
        System.exit(res);
    }
}
```

WordCount.java:

```java
package org.apache.hadoop.examples;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Splits each input line on whitespace and emits (word, 1)
    public static class TokenizerMapper extends Mapper<Object, Text, Text, IntWritable> {
        private static final IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Sums all counts received for one word and emits (word, total)
    public static class IntSumReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Strips generic options such as -D key=value; what remains are the paths
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length < 2) {
            System.err.println("Usage: wordcount <in> [<in>...] <out>");
            System.exit(2);
        }
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class); // pre-sums counts on the map side
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // Every argument except the last is an input path
        for (int i = 0; i < otherArgs.length - 1; i++) {
            FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
        }
        // The last argument is the output directory, which must not exist yet
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[otherArgs.length - 1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
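Both programs register their reducer as the combiner as well (`LongSumReducer` in Grep, `IntSumReducer` in WordCount). The sketch below, in plain Java with made-up names and data, illustrates why that is safe: summing is associative and commutative, so pre-summing each map task's output locally yields the same totals while fewer records are shuffled to the reducer.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of a combiner: sum locally per map task, then sum again in the reducer.
class CombinerSketch {

    /** Combiner: sums the (word, 1) records produced by ONE map task. */
    static Map<String, Integer> combine(List<String> mapOutputWords) {
        Map<String, Integer> local = new TreeMap<>();
        for (String w : mapOutputWords) {
            local.merge(w, 1, Integer::sum);
        }
        return local;
    }

    /** Reducer: sums the partial counts arriving from all map tasks. */
    static Map<String, Integer> reduce(List<Map<String, Integer>> partials) {
        Map<String, Integer> total = new TreeMap<>();
        for (Map<String, Integer> part : partials) {
            part.forEach((w, c) -> total.merge(w, c, Integer::sum));
        }
        return total;
    }

    public static void main(String[] args) {
        List<String> task1 = List.of("hadoop", "hadoop", "yarn");
        List<String> task2 = List.of("hadoop", "yarn", "yarn");
        Map<String, Integer> c1 = combine(task1);
        Map<String, Integer> c2 = combine(task2);
        System.out.println("records shuffled without combiner: " + (task1.size() + task2.size())); // 6
        System.out.println("records shuffled with combiner: " + (c1.size() + c2.size()));          // 4
        System.out.println(reduce(List.of(c1, c2))); // {hadoop=3, yarn=3}
    }
}
```

Hadoop may run a combiner zero, one, or several times, so this only works for operations like summing, where repeated partial application does not change the result.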
